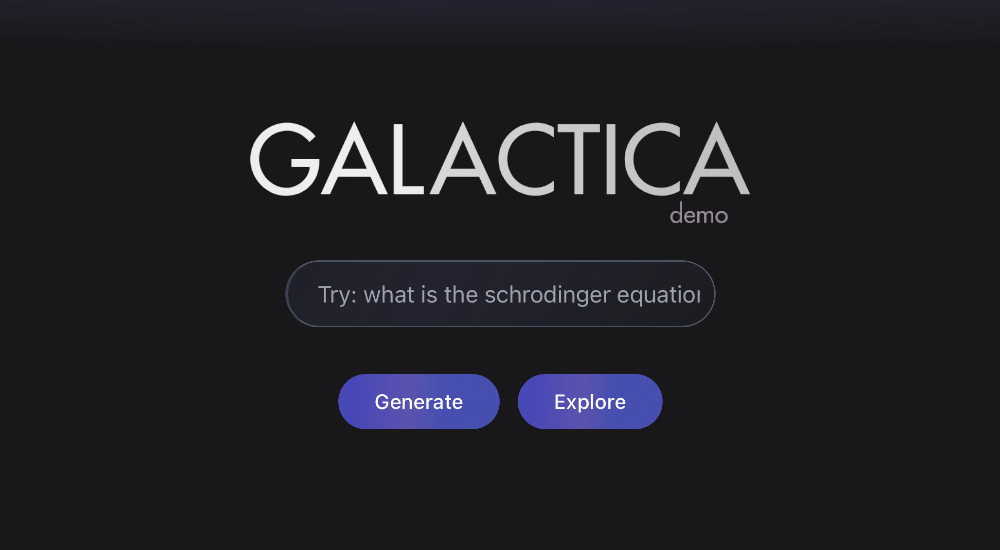

On Tuesday, Meta AI unveiled a demo of Galactica, a large language model built to “store, combine and reason about scientific knowledge.” Although it was designed to speed up the writing of scientific literature, users who tested it adversarially found that it could also produce plausible-sounding nonsense. According to MIT Technology Review, Meta pulled the demo after several days of ethical criticism.

Large language models (LLMs), such as OpenAI’s GPT-3, learn to write by analyzing massive numbers of text examples and picking up the statistical relationships between words. As a result, they can write documents that seem plausible on the surface but are actually full of inaccuracies and damaging generalizations.

Critics have dubbed LLMs “stochastic parrots” for their ability to convincingly reproduce language without understanding its meaning. Galactica is an LLM aimed at scientific writing. Its authors trained it on “a large and curated corpus of humanity’s scientific knowledge,” which included more than 48 million papers, textbooks, lecture notes, scientific websites, and encyclopedias. According to the Galactica paper, Meta AI researchers believed that this purportedly high-quality data would lead to high-quality output.

I asked #Galactica about some things I know about and I'm troubled. In all cases, it was wrong or biased but sounded right and authoritative. I think it's dangerous. Here are a few of my experiments and my analysis of my concerns. (1/9)

— Michael Black (@Michael_J_Black) November 17, 2022

Starting Tuesday, visitors to the Galactica website could type in prompts to generate documents such as literature reviews, wiki articles, lecture notes, and answers to questions, following the examples the site provided. The site presented the model as “a new interface to access and manipulate what we know about the universe.”

Although some people found the demo promising and useful, others quickly discovered that anyone could enter racist or otherwise offensive prompts and just as easily generate authoritative-sounding content on those topics. For instance, someone used it to write a wiki entry about a fictional research paper titled “The benefits of eating crushed glass.”


Even when Galactica’s output did not violate social norms, the model could still get well-established scientific facts wrong, producing errors such as incorrect dates or animal names that only a specialist would be able to spot. As a result, Meta pulled the Galactica demo on Thursday.

Afterward, Yann LeCun, Meta’s Chief AI Scientist, tweeted, “Galactica demo is off line for now. It's no longer possible to have some fun by casually misusing it. Happy?”

The episode recalls a recurring ethical question in artificial intelligence: when it comes to potentially harmful generative models, is it up to the general public to use them responsibly, or up to the publishers who make them available to prevent misuse? Where industry practice settles between those two extremes will likely vary between cultures and as deep learning models mature. Ultimately, government regulation may play a large role in shaping the answer.